Gallery TP Matrix

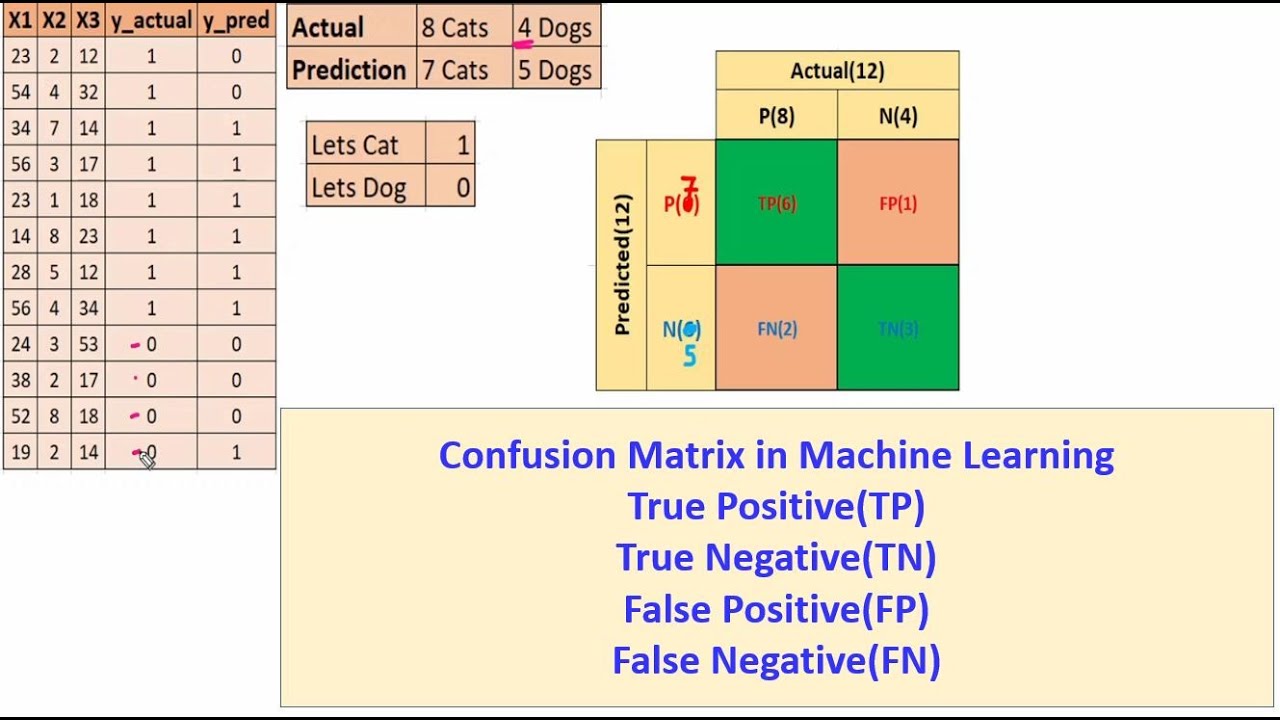

The confusion matrix is an important and commonly used tool in machine learning. This is particularly true of classification problems, where we build systems that predict categorical values. The different quadrants of a confusion matrix offer different insights. TP and TN: these two quadrants represent correct predictions, representing the...

Confusion matrix: True Positive (TP), True Negative (TN), False Positive (FP), False Negative (FN)

Now, to fully understand the confusion matrix for this binary class classification problem, we first need to get familiar with the following terms: True Positive (TP) refers to a sample belonging to the positive class being classified correctly. True Negative (TN) refers to a sample belonging to the negative class being classified correctly.

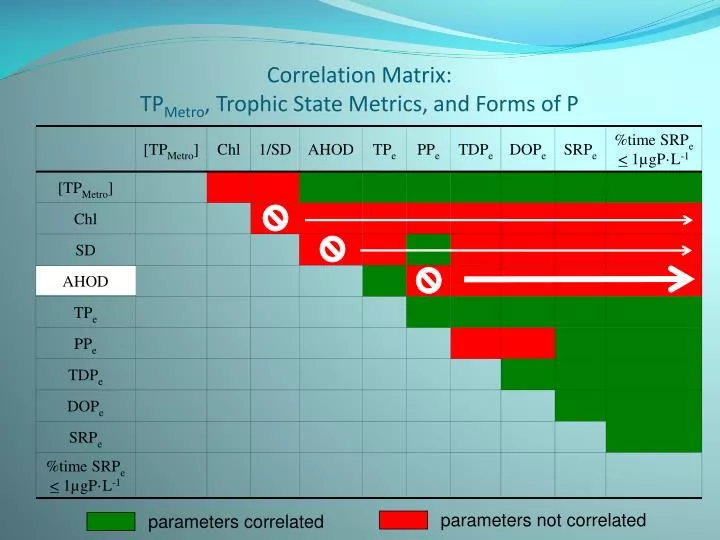

TP Matrix

TP (True Positive) = 1. FP (False Positive) = 4. TN (True Negative) = 0. FN (False Negative) = 2. For a classic machine learning model for binary classification, you would typically run the following code to get the confusion matrix: `from sklearn.metrics import confusion_matrix`, followed by `confusion_matrix(y_true, y_pred)`.
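As a rough illustration of where those four numbers come from, the sketch below counts them by hand in plain Python. The function name `binary_confusion_counts` and the label lists are hypothetical, chosen only so that the result matches the counts quoted above. With scikit-learn installed, `confusion_matrix(y_true, y_pred).ravel()` should give the same counts for 0/1 labels, in the order TN, FP, FN, TP.

```python
def binary_confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN by comparing each actual label with its prediction."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


# Hypothetical labels (assumption): picked to reproduce TP=1, FP=4, TN=0, FN=2.
y_true = [1, 0, 0, 0, 0, 1, 1]
y_pred = [1, 1, 1, 1, 1, 0, 0]

counts = binary_confusion_counts(y_true, y_pred)
print(counts)
```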

It is a table with 4 different combinations of predicted and actual values. It is extremely useful for measuring Recall, Precision, Specificity, Accuracy, and, most importantly, AUC-ROC curves. Let's understand TP, FP, FN, and TN in terms of a pregnancy analogy.

TaylorMade RBZ TP Matrix Ozik Altus 85 Taper Tip Hybrid Graphite Shaft Monark Golf

A multi-class confusion matrix is different from a binary confusion matrix. Let's explore how this is different: Diagonal elements: values along the diagonal represent the number of instances where the model correctly predicted the class. They are equivalent to True Positives (TP) in the binary case, but for each class.
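To make the role of the diagonal concrete, here is a minimal sketch that builds a multi-class confusion matrix from label lists and reads off the correctly predicted count for each class. It assumes rows are actual classes and columns are predicted classes. The helper `confusion_matrix_counts` and the three animal labels are made up for illustration.

```python
def confusion_matrix_counts(y_true, y_pred, labels):
    """Build a square count matrix: rows are actual classes, columns are predicted."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


# Hypothetical three-class data (assumption, not from the text above).
labels = ["cat", "dog", "bird"]
y_true = ["cat", "cat", "dog", "dog", "bird", "bird", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "bird", "cat", "bird"]

matrix = confusion_matrix_counts(y_true, y_pred, labels)

# Diagonal cell [i][i] counts samples of class i that were predicted as class i.
correct_per_class = {label: matrix[i][i] for i, label in enumerate(labels)}

for label, row in zip(labels, matrix):
    print(label, row)
print(correct_per_class)
```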

TaylorMade TP Matrix Ozik Altus RBZ Graphite Golf Hybrid/Rescue Shaft 85g / 43 inches / .370 tip

The confusion matrix is particularly useful when dealing with binary or multiclass classification problems. Let's break down the components of a confusion matrix: True Positive (TP): This represents the number of instances where the model correctly predicts the positive class. In other words, the model correctly identifies positive samples.

TP Matrix puts safety first as it purchases defibrillator TP Matrix

To calculate a model's precision, we need the true positive and false positive counts from the confusion matrix: Precision = TP / (TP + FP).

Recall goes another route. Instead of looking at the number of false positives the model predicted, recall looks at the number of false negatives that were thrown into the prediction mix: Recall = TP / (TP + FN).
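The two formulas translate directly into code. The sketch below is one possible way to write them. The counts are illustrative assumptions, and returning 0.0 when a denominator is zero is a convention chosen here, not something the formulas themselves dictate.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP); returns 0.0 if nothing was predicted positive."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp, fn):
    """Recall = TP / (TP + FN); returns 0.0 if there are no actual positives."""
    actual_positive = tp + fn
    return tp / actual_positive if actual_positive else 0.0


# Illustrative counts (assumption): 8 true positives, 2 false positives, 4 false negatives.
p = precision(tp=8, fp=2)
r = recall(tp=8, fn=4)
print(f"precision={p:.3f} recall={r:.3f}")
```

Note that the two metrics share a numerator and differ only in which kind of error appears in the denominator.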

TP Matrix retains IRIS (International Railway Industry Standard) certification after successful

The Confusion Matrix: Getting the TPR, TNR, FPR, FNR. The confusion matrix of a classifier summarizes the TP, TN, FP, FN measures of performance of our model. The confusion matrix can be further used to extract more measures of performance such as: TPR, TNR, FPR, FNR and accuracy.
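A small sketch of how the four rates can be derived from the four counts follows. The function name `rates` and the sample counts are assumptions for illustration. TPR and FNR are computed over the actual positives (TP + FN), while TNR and FPR are computed over the actual negatives (TN + FP).

```python
def rates(tp, tn, fp, fn):
    """Derive TPR, TNR, FPR, and FNR from the four confusion-matrix counts."""
    positives = tp + fn  # all samples that are actually positive
    negatives = tn + fp  # all samples that are actually negative
    return {
        "TPR": tp / positives if positives else 0.0,  # sensitivity / recall
        "FNR": fn / positives if positives else 0.0,  # miss rate
        "TNR": tn / negatives if negatives else 0.0,  # specificity
        "FPR": fp / negatives if negatives else 0.0,  # fall-out
    }


# Illustrative counts (assumption): 50 actual positives, 50 actual negatives.
result = rates(tp=40, tn=45, fp=5, fn=10)
print(result)
```

Because each pair shares a denominator, TPR + FNR and TNR + FPR both sum to 1 whenever that denominator is nonzero.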

The TP difference matrix heat maps of different channel configurations... Download Scientific

A confusion matrix is a summary of prediction results on a classification problem. The numbers of correct and incorrect predictions are summarized with count values and broken down by each class. This is the key to the confusion matrix. The confusion matrix shows the ways in which your classification model...

PPT Correlation Matrix TP Metro , Trophic State Metrics, and Forms of P PowerPoint

So, the number of true positive points is TP, and the total number of positive points is P, the sum of the column in which TP appears. Using the same trick, we can write the FPR and FNR formulae. So now, I believe you can understand the confusion matrix and the different formulae related to it.

TP MATRIX

A confusion matrix is useful in the supervised learning category of machine learning using a labelled data set. As shown below, it is represented by a table. This is a sample confusion matrix for a binary classifier (i.e. 0-Negative or 1-Positive). Diagram 1: Confusion Matrix. The confusion matrix is represented by a positive and a negative class.

Kaldi matrix/tpmatrix.cc File Reference

A confusion matrix is a performance evaluation tool in machine learning that summarizes how accurately a classification model predicts each class. It displays the number of true positives, true negatives, false positives, and false negatives. This matrix aids in analyzing model performance, identifying misclassifications, and improving predictive accuracy.

In predictive analytics, a table of confusion (sometimes also called a confusion matrix) is a table with two rows and two columns that reports the number of true positives, false negatives, false positives, and true negatives. This allows more detailed analysis than simply observing the proportion of...

TP, the true positive value, is where the actual value and the predicted value are the same. The confusion matrix for the Iris dataset is as below. Let us calculate the TP, TN, FP, and FN values for the class Setosa using the above tricks. TP: the actual value and predicted value should be the same. So concerning the Setosa class, the value of cell...
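The per-class calculation can be sketched as a one-vs-rest reduction of the multi-class matrix. The 3x3 matrix below is a hypothetical example for the three Iris classes, not the matrix referred to in the text, and it assumes rows are actual classes and columns are predicted classes. The helper name `one_vs_rest_counts` is likewise made up.

```python
def one_vs_rest_counts(matrix, k):
    """Treat class k as 'positive' and every other class as 'negative'."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]                        # actual k, predicted k
    fn = sum(matrix[k]) - tp                 # actual k, predicted something else
    fp = sum(row[k] for row in matrix) - tp  # actual something else, predicted k
    tn = total - tp - fn - fp                # everything not involving class k
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Hypothetical confusion matrix (assumption): rows = actual, columns = predicted.
labels = ["setosa", "versicolor", "virginica"]
matrix = [
    [16, 0, 0],
    [0, 17, 1],
    [0, 0, 11],
]

per_class = {label: one_vs_rest_counts(matrix, i) for i, label in enumerate(labels)}
for label, counts in per_class.items():
    print(label, counts)
```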

About TP Matrix

Our confusion matrix calculator helps you to calculate all the metrics you need to assess the performance of your machine learning model. To calculate accuracy from a confusion matrix, use the formula below: accuracy = (TP + TN) / (TP + FN + FP + TN). The accuracy for this example is (80 + 70) / (80 + 70 + 20 + 30) = 150 / 200 = 0.75.
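The accuracy formula is easy to check in code. In the sketch below, the example's numbers are plugged in under the assumption that TP = 80 and TN = 70, with 20 and 30 as the two error counts. Which of those is FP and which is FN is a guess, but it does not change the accuracy.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + FN + FP + TN)."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Counts from the example; the FP/FN split is an assumption.
acc = accuracy(tp=80, tn=70, fp=20, fn=30)
print(acc)  # 150 correct out of 200 samples
```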

The matrix used to reflect these outcomes is known as a Confusion Matrix. There are four potential outcomes here: True Positive (TP) indicates the model predicted an outcome of true, and the actual observation was true.